Crawlability is essential for any website that wants to be visible in search engine results. However, a number of technical issues can prevent search engines from crawling and indexing a site effectively. In this guide, we cover nine common crawlability problems and provide actionable solutions for each. By applying these fixes, website owners and webmasters can improve their site’s visibility and search rankings and attract more organic traffic.

What Are Crawlability Problems?

Crawlability problems are issues that prevent search engines from accessing your website’s pages. Search engines like Google, Bing, Yahoo, and Yandex use automated bots to read and analyze your pages; this process is called crawling.

How Do Crawlability Issues Affect SEO (Search Engine Optimization)?

Crawlability problems can drastically affect your SEO game.

Why?

Because crawlability problems make some or all of your website’s pages practically invisible to search engines.

1- Pages Blocked In Robots.txt

Search engines first look at your robots.txt file, which tells them which pages they should and shouldn’t crawl. If your robots.txt file looks like this, your entire website is blocked from crawling:

User-agent: *

Disallow: /

Fixing this problem is simple: replace the “Disallow” directive with “Allow”, which enables search engines to access your entire website.
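To confirm what a given robots.txt actually permits, the rules can be tested locally. The following is a minimal sketch using Python's standard `urllib.robotparser` module; the helper name `is_crawlable` and the example URL are assumptions made for illustration, and real search engine bots may interpret edge cases differently.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


blocked = "User-agent: *\nDisallow: /"
allowed = "User-agent: *\nAllow: /"

print(is_crawlable(blocked, "https://www.example.com/blog/"))  # False
print(is_crawlable(allowed, "https://www.example.com/blog/"))  # True
```

With the “Allow” directive in place, the file reads: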

User-agent: *

Allow: /

2- Nofollow Links

The nofollow tag tells search engines not to crawl the links on a webpage. The tag looks like this:

<meta name="robots" content="nofollow">

If this tag is present on your pages, the pages they link to might not get crawled, which creates crawlability problems on your site. Check for nofollow links with a tool like SEMRush’s Site Audit.

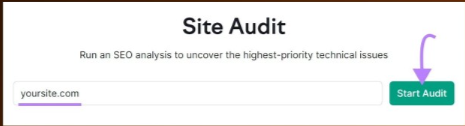

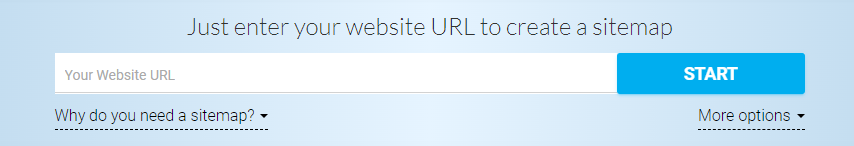

Open the tool, enter your website URL, and click “Start Audit”.

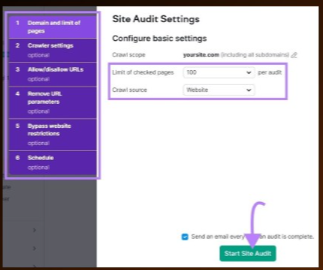

The “Site Audit Settings” window will appear. From here, configure the basic settings and click “Start Site Audit”.

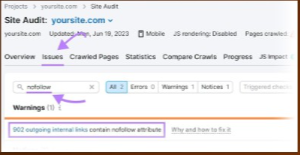

Once the audit is complete, navigate to the “Issues” tab and search for “nofollow”.
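If you would rather check a single page by hand, the meta robots tag can also be detected with a short script. This is a rough sketch using Python's standard `html.parser` module; the class and function names are made up for this example, and it only inspects `<meta name="robots">` tags in HTML you already have as a string.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        # The content attribute may hold several comma-separated directives.
        for directive in attributes.get("content", "").split(","):
            if directive.strip():
                self.directives.add(directive.strip().lower())


def robots_directives(html: str) -> set:
    """Return the set of robots directives found in the HTML text."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


page = '<html><head><meta name="robots" content="nofollow"></head></html>'
print("nofollow" in robots_directives(page))  # True
```

Because the function returns every directive it finds, the same check also reveals other values such as “noindex”.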

3- Bad Site Architecture

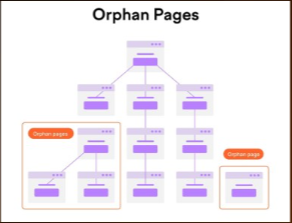

Site architecture is how your pages are organized across your website. A good site architecture ensures every page is just a few clicks away from the homepage and that there are no orphan pages (i.e., pages with no internal links pointing to them). This helps search engines easily access all pages.

But a bad site architecture can create crawlability issues. Notice the example site structure depicted below. It has orphan pages.

Because there’s no linked path to them from the homepage, they may go unnoticed when search engines crawl the site.

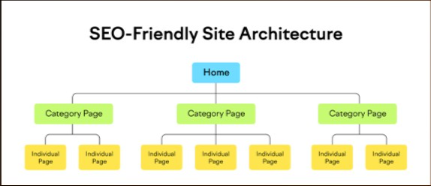

The solution is straightforward: create a site structure that logically organizes your pages in a hierarchy through internal links.

In the example above, the homepage links to category pages, which then link to individual pages on your site. This provides a clear path for crawlers to find all your important pages.
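The idea of pages being "a few clicks away from the homepage" can be measured with a breadth-first search over your internal links. The sketch below assumes you already have a mapping from each page to the pages it links to; the `site_links` data and the function name `click_depths` are hypothetical.

```python
from collections import deque


def click_depths(links: dict, homepage: str) -> dict:
    """Breadth-first search: fewest clicks from the homepage to each page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: the homepage links to categories, which link to pages.
site_links = {
    "/": ["/shoes/", "/hats/"],
    "/shoes/": ["/shoes/runner/"],
    "/hats/": ["/hats/beanie/"],
    "/old-landing-page/": ["/"],  # links out, but nothing links to it
}

depths = click_depths(site_links, "/")
print(depths["/shoes/runner/"])       # 2
print(set(site_links) - set(depths))  # {'/old-landing-page/'}
```

Any page missing from the result has no linked path from the homepage, which is the situation a crawler starting there would also face.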

4- Lack of Internal Links

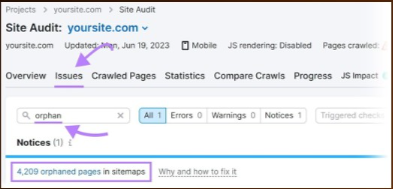

Pages without internal links can create crawlability problems because search engines will have trouble discovering them. So, identify your orphan pages and add internal links to them. To find them, run an audit with the Site Audit tool. Then, go to the “Issues” tab and search for “orphan”. You’ll see whether there are any orphan pages present on your site.

To solve this problem, add internal links to orphan pages from other relevant pages on your site.
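A simple way to spot pages that lack internal links is to count how many links point at each known page. This is only a sketch under the assumption that you have a list of your pages and a page-to-links mapping (for example, exported from a crawl); the data and the name `inbound_link_counts` are invented for illustration.

```python
from collections import Counter


def inbound_link_counts(pages, links):
    """Count internal links pointing at each known page (self-links ignored)."""
    counts = Counter({page: 0 for page in pages})
    for source, targets in links.items():
        for target in set(targets):  # count each linking page once
            if target != source and target in counts:
                counts[target] += 1
    return counts


known_pages = ["/", "/blog/", "/blog/post-a/", "/blog/post-b/"]
internal_links = {
    "/": ["/blog/"],
    "/blog/": ["/", "/blog/post-a/"],
    "/blog/post-a/": ["/blog/"],
}

counts = inbound_link_counts(known_pages, internal_links)
orphans = sorted(page for page, n in counts.items() if n == 0)
print(orphans)  # ['/blog/post-b/']
```

Pages with a count of zero are orphan candidates, and pages with very low counts may also deserve more internal links.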

5- Bad Sitemap Management

A sitemap provides a list of pages on your site that you want search engines to crawl, index, and rank. If your sitemap excludes any pages you want to be found, they might go unnoticed and create crawlability issues. A tool such as XML Sitemaps Generator can help include all pages meant to be crawled.

Enter your website URL, and the tool will generate a sitemap for you automatically.

Then, save the file as “sitemap.xml” and upload it to the root directory of your website. For example, if your website is www.example.com, then your sitemap should be accessible at www.example.com/sitemap.xml.

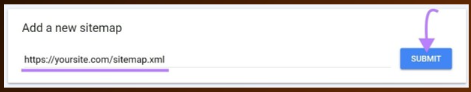

Finally, submit your sitemap to Google in your Google Search Console account.

To do that, access your account and click “Sitemaps” in the left-hand menu. Then, enter your sitemap URL and click “Submit”.
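If you prefer to build the file yourself rather than use a generator, a basic sitemap is a small XML document. The sketch below uses Python's standard `xml.etree.ElementTree` and the namespace from the sitemaps.org protocol; the function name `build_sitemap` and the URL list are assumptions for illustration, and optional fields such as last-modified dates are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls) -> str:
    """Return sitemap XML with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


xml_text = build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/blog/",
])
print(xml_text)
```

Writing `xml_text` to a file named “sitemap.xml” gives you something you can upload to your site's root directory.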

6- Noindex Tags

A “noindex” meta robots tag instructs search engines not to index a page. The tag looks like this:

<meta name="robots" content="noindex">

Although the noindex tag is intended to control indexing, it can create crawlability issues if you leave it on your pages for a long time. Google treats long-term “noindex” tags as nofollow tags, as confirmed by Google’s John Mueller.

Over time, Google will stop crawling the links on those pages altogether. So, if your pages aren’t getting crawled, long-term noindex tags could be the culprit. Identify these pages using SEMRush’s Site Audit tool.

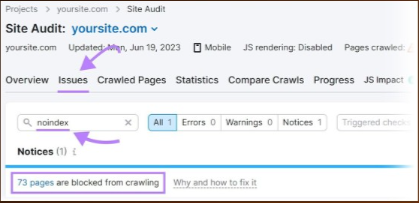

Set up a project in the tool to run your first crawl. Once it’s complete, head over to the “Issues” tab and search for “noindex”. The tool will list pages on your site with a “noindex” tag.

7- Slow Site Speed

When search engine bots visit your site, they have limited time and resources to devote to crawling, commonly referred to as a crawl budget. Slow site speed means pages take longer to load, which reduces the number of pages bots can crawl within a crawl session. As a result, important pages could be skipped. Solve this problem by improving your overall website performance and speed.
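To see why load time matters, consider a deliberately simplified model in which a bot fetches pages one at a time within a fixed time window. The numbers below are hypothetical, and real crawlers fetch in parallel and adjust their pace, so treat this only as an illustration of the trade-off.

```python
def pages_crawled(session_seconds: float, seconds_per_page: float) -> int:
    """Simplified model: pages fetched one at a time in a fixed time window."""
    if seconds_per_page <= 0:
        raise ValueError("seconds_per_page must be positive")
    return int(session_seconds // seconds_per_page)


# Hypothetical 60-second crawl session.
print(pages_crawled(60, 0.5))  # 120 pages at 0.5 seconds per page
print(pages_crawled(60, 2.0))  # 30 pages at 2 seconds per page
```

In this toy model, a page that loads four times slower means a quarter as many pages crawled in the same session.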

8- Internal Broken Links

Internal Broken Links are hyperlinks that point to dead pages on your site. They return a 404 error like this:

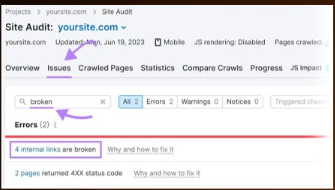

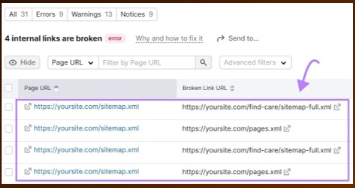

Broken links can have a significant impact on website crawlability because they prevent search engine bots from accessing the linked pages. To find broken links on your site, use the Site Audit tool. Navigate to the “Issues” tab and search for “broken”.

Next, click “internal links are broken” and you’ll see a report listing all your broken links.

To fix these broken links, substitute a different link, restore the missing page, or add a 301 redirect to another relevant page on your site.
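For a quick spot check outside an audit tool, you can request each internal URL and look at the status code it returns. The sketch below uses Python's standard `urllib`; the function names and example URLs are assumptions, connection failures are not handled, and the demonstration uses made-up statuses so that it runs without network access.

```python
import urllib.error
import urllib.request


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for url using a HEAD request."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx and 5xx responses are raised as HTTPError


def find_broken_links(urls, get_status=fetch_status):
    """Return (url, status) pairs whose status is 400 or higher."""
    broken = []
    for url in urls:
        status = get_status(url)
        if status >= 400:
            broken.append((url, status))
    return broken


# Offline demonstration with made-up statuses instead of real requests.
fake_statuses = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-page/": 404,
    "https://www.example.com/api/": 500,
}
print(find_broken_links(fake_statuses, fake_statuses.get))
```

Calling `find_broken_links(list_of_urls)` without the second argument performs real requests through `fetch_status`.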

9- Server-Side Error

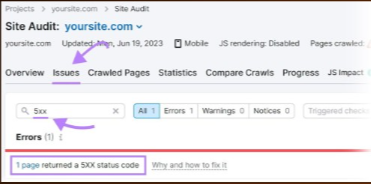

Server-side errors (like 500 HTTP status codes) disrupt the crawling process because they mean the server couldn’t fulfill the request, which makes it difficult for bots to crawl your website’s content. SEMRush’s Site Audit tool can help you find server-side errors.

Search for “5xx” in the “Issues” tab.

If errors are present, click “page returned a 5xx status code” to view a complete list of affected pages. Then, send this list to your developer to configure the server properly.
